Economist and Nobel laureate Paul Romer is not so enthusiastic about artificial intelligence, and he doesn’t trust it either.

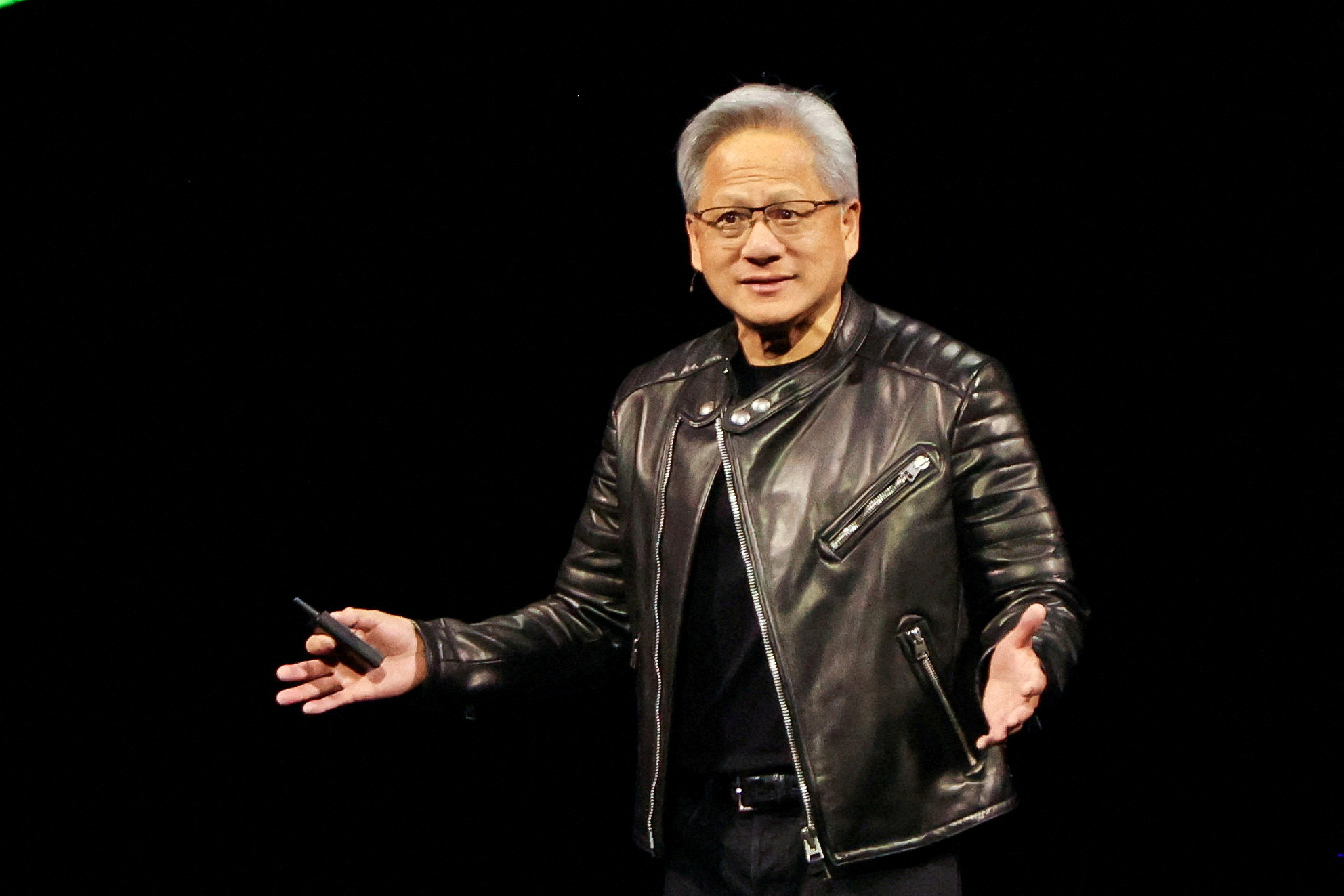

During a panel at the Febraban Tech event, the Boston College professor challenged the enthusiasm with which the technology has been received. He also offered two pieces of advice: don’t listen to computer scientists, and don’t listen to the companies that develop these technologies.

“Computer scientists, because they are interested in the new things they can do,” he said. “And companies, because they want to sell what they have built with computer systems. They are selling tools where the first thing you should be able to tell is when there is a mistake.” That, in Romer’s assessment, has not happened.


In his view, generative AI systems produce answers that seem reliable but conceal errors. “The models are not improving toward 100% accuracy. They are stuck, and we keep feeding them more and more data. We will never reach full accuracy,” he says.

His reasoning is that, in the end, it all comes down to mathematics: in the world of code, errors can always arise somewhere among the hundreds of thousands of possible combinations of data.

In Romer’s assessment, this capacity of artificial intelligence to produce inaccurate but convincing answers poses a risk to institutions devoted to seeking the “truth”: science above all, but also the judiciary and journalism.


“Historically, humans have created institutions, social systems that encourage people and help them make progress toward the truth. The system of science that emerged after the scientific revolution is by far the most important,” he said. “The biggest risk we face now is losing this system that has served us so well over the past few centuries.”

Although he does not rule out that the models can be useful, Romer believes that people and companies need to calculate more carefully how costly the errors caused by using artificial intelligence can be. And those costs can be high. “We are using software to move large pieces of metal, such as cars and aircraft, where errors are very costly,” he said. His summary: “Machine learning is not reliable.”