If, on the one hand, these technologies have positive applications, on the other they pose a growing risk of misinformation, digital scams and attacks on people's reputations

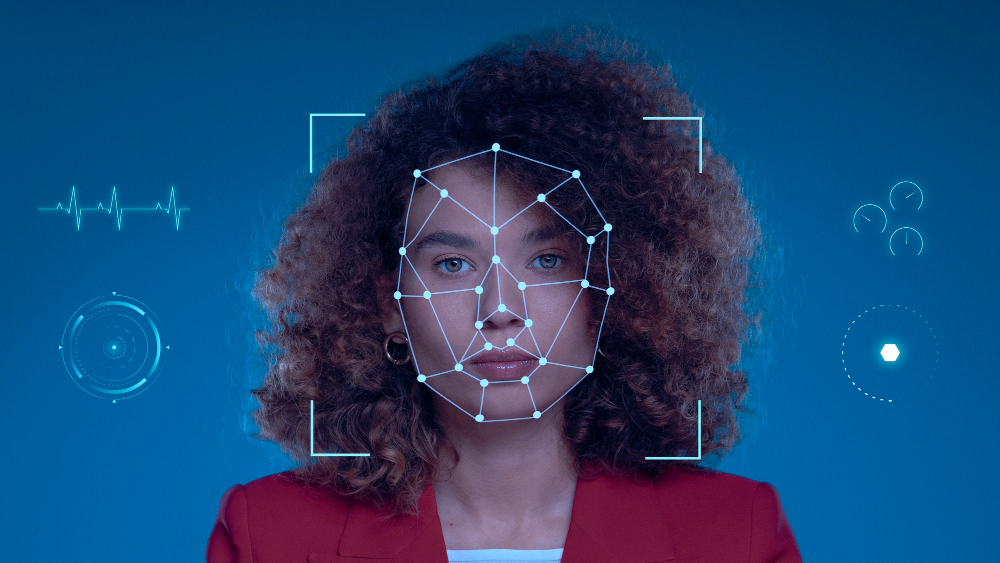

In recent years, artificial intelligence (AI) has brought extraordinary advances to areas such as education, security and even entertainment. However, the same technology that creates realistic films and flawless voiceovers can also be used to deceive. We are talking about deepfakes: videos manipulated by AI that can simulate people, voices and settings almost perfectly.

If, on the one hand, these technologies have positive applications, on the other they pose a growing risk of misinformation, digital scams and attacks on people's reputations. So how can you tell whether a video was generated by AI? Below are 10 practical tips that can help the public recognize signs of digital manipulation:

1. Facial movements

AI still struggles to reproduce very subtle expressions. Notice whether the facial muscles look rigid, lacking micro-expressions or natural tremors.

2. Synchronization between mouth and voice

Lip movements often fail to match the words exactly. Watch for delays, or for speech that runs on without natural pauses.

3. Artificial eyes

A fixed stare, infrequent blinking or unnatural-looking eyes are classic signs of a manipulated video.

4. Inconsistent lighting and shadows

If shadows do not follow the movements of the face, or the lighting looks out of place for the surroundings, be wary.

5. “Robotic” voice

AI-generated voices may sound monotonous, with little emotion, an odd rhythm and breaths in the wrong places.

6. Blurred details

In lower-quality videos, hair, teeth and ears may show smudges, distorted pixels or flawed edges.

7. Body inconsistency

The face may be well rendered, but the body, hands and gestures give it away: extra fingers, or stiff or disproportionate movements.

8. Context

Ask yourself: was the person really in this place? Is the content consistent with what this person usually says and does? Scammers often use deepfakes to fabricate statements that were never made.

9. Cuts and transitions

Changes of camera angle or cuts can expose sudden glitches in the person's appearance or in the background.

10. Verification tools

There are already solutions that help verify the authenticity of videos, such as Deepware, Sensity AI and Hive Moderation. In addition, major video platforms are rolling out automatic detection.

A critical look is essential

Deepfakes are not just a technological problem: they undermine trust in personal, political and institutional relationships. Citizens, companies and governments need to develop a critical stance, combining careful observation with detection tools.

As I often point out in my analyses, digital literacy is the first line of defense. Recognizing the signs of manipulation protects both you and society against the spread of fake news and online scams.

Want to dig deeper into the subject, have a question or comment, or want to share your experience with this topic? Write to me on Instagram: .

*This text does not necessarily reflect the opinion of Jovem Pan.