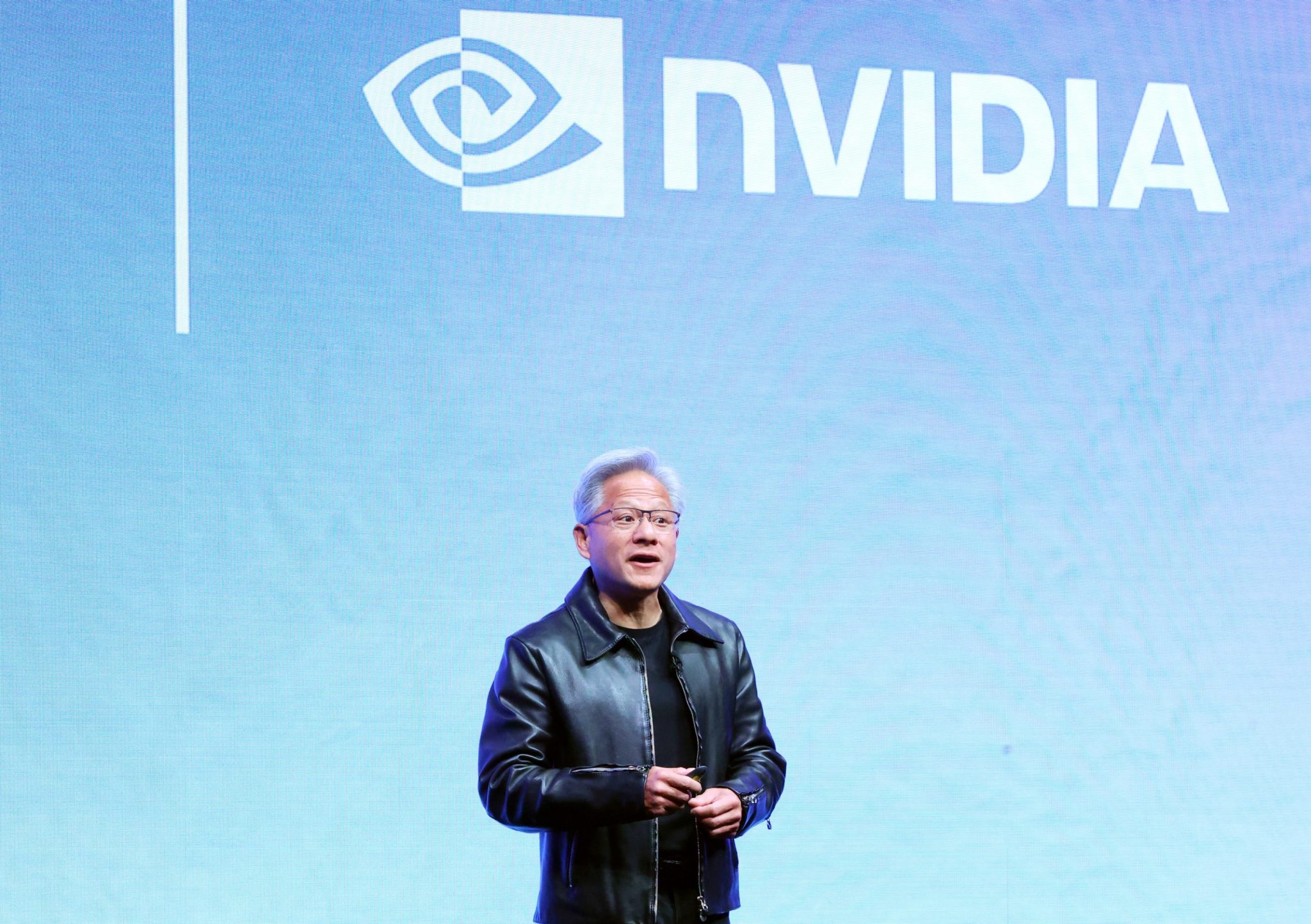

LAS VEGAS, United States, Jan 5 (Reuters) – Nvidia Chief Executive Jensen Huang said on Monday that the company’s next generation of chips is in ‘full production’ and can deliver five times the artificial intelligence computing power of the company’s previous processors.

In a speech at the Consumer Electronics Show in Las Vegas, the leader of the world’s most valuable company revealed new details about the chips, which will arrive later this year. Nvidia executives told Reuters that the chips are already in the company’s labs, where AI companies are testing them.

The Vera Rubin platform, made up of six separate Nvidia chips, is expected to launch later this year, with the flagship device containing 72 of the company’s graphics processing units (GPUs) and 36 of its new central processors (CPUs). Huang showed how they can be grouped into ‘pods’ with more than 1,000 Rubin chips.

To achieve the new performance results, however, Huang said Rubin chips use a proprietary data format that the company hopes the wider industry will adopt.

“That’s how we were able to deliver such a gigantic increase in performance even though we only had 1.6 times the number of transistors,” said Huang.

While Nvidia still dominates the market for training AI models, it faces much more competition – from traditional rivals like AMD as well as customers like Google – in delivering the fruits of these models to hundreds of millions of users of chatbots and other AI technologies.

Much of Huang’s speech focused on how well the new chips will work for this task, including the addition of a new layer of storage technology called ‘context memory storage’, which is intended to help chatbots respond faster to questions and long conversations when serving millions of users at the same time.

Nvidia also introduced a new generation of network switches with a new type of connection called ‘co-packaged optics’. The technology, which is essential for connecting thousands of machines into one, competes with offerings from Broadcom and Cisco Systems.

In other announcements, Huang highlighted a new automotive AI model that can help self-driving cars make decisions about which way to go, leaving a documented record for engineers to use later.

Nvidia presented research on the model, called Alpamayo, late last year, and Huang said on Monday that it will be released more widely, along with the data used to train it, so that automakers can evaluate it.

“Not only do we open source the models, but we also open source the data we use to train these models, because only in this way can you truly trust how the models were created,” Huang said in Las Vegas.