Columbia University

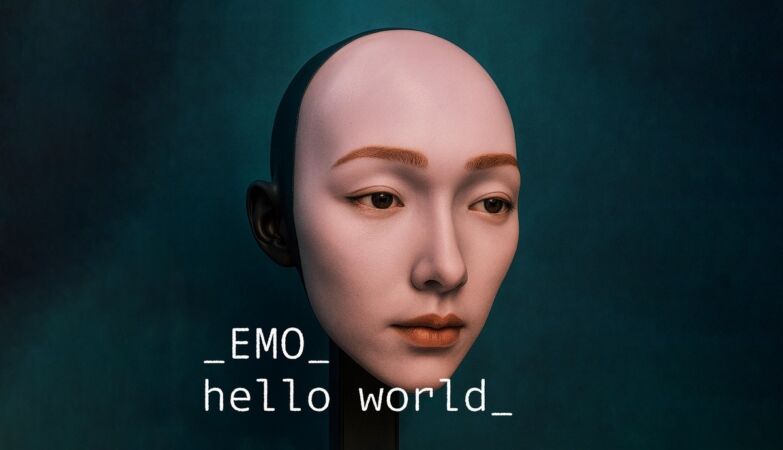

EMO, Columbia Engineering’s lip-sync robot

Researchers at Columbia University have designed a robot capable of realistic lip movements when speaking and singing. The robot used these abilities to articulate words and even sing a song from its AI-generated debut album, though it still sits somewhere in the “Uncanny Valley”.

Previous studies have shown that most people focus on lip movements during face-to-face conversations.

However, building robots that can replicate these lip movements continuously remains a challenge. Even the most advanced robots currently on the market produce, at best, puppet-like gestures when they communicate.

Now, a team of researchers from Columbia University, led by Hod Lipson and Sally Scapa, is producing robots that aim to overcome these limitations.

At this stage, the team’s creations still seem inanimate, or even unsettling, because their facial expressions do not match human expectations. This invokes a phenomenon known as the “Uncanny Valley”: the slight strangeness we feel when faced with a representation of a human that is “almost there” but not quite perfect.

The team’s work, detailed in a paper published last week in the journal Science Robotics, reveals how the robot used its skills to articulate words in different languages and even sing a song from its AI-generated debut album.

Inside the Uncanny Valley

So what exactly is the Uncanny Valley? “It’s that strange feeling you get when you observe a robot trying to look human, but something essential is missing,” explains Lipson.

“I think that half the problem lies in lip movement, because half the time that humans engage in face-to-face conversations, they stare at the interlocutor’s lips,” said Lipson.

“To date, robots don’t have lips, and most don’t even have faces. Our EMO robot is far from perfect, but I think it’s on the way to crossing the Uncanny Valley,” he adds.

Unlike traditional approaches, which rely on rigid programming and predefined rules, the Columbia team’s robot learns by observing humans in action.

Initially, the robot was set up to practice in front of a mirror, experimenting with its 26 facial motors to “learn” how its own face moves.

Once familiar with its own expressions, the robot watched hours of videos of humans talking and singing, learning the exact synchronization and coordination of lip movements.

“We don’t program the motors directly. Instead, the robot’s AI learns over time how to move the motors by observing humans and then observing itself in the mirror, and comparing,” explains Lipson.

After this training, the robot demonstrated the ability to translate audio directly into synchronized lip-motor action.

“Robots improve the more they interact with humans,” explained Lipson in a statement from Columbia Engineering. “This learning-based approach allows the robot to continually refine its expressions, just as a child learns by observing and imitating adults.”

“The robot’s facial motors are scattered under the robot’s face and are designed to allow the robot to make a wide variety of facial gestures, including lip movement, smiling and other movements,” Lipson added.

Achieving this type of human-like lip movement requires flexible facial “skin” and many small motors capable of rapid and silent movement.

The intricate patterns of lip movement are determined by vocal sounds and phonemes, a kind of choreography that humans perform effortlessly using dozens of facial muscles.

By combining a highly motorized face with a vision-to-action learning model, Columbia’s robot overcomes these obstacles: it first explored random facial expressions, then expanded and refined its abilities by observing humans, building a model that links auditory cues to precise motor movements.
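To make the two-stage idea concrete, here is a minimal toy sketch in Python. It is not the Columbia team’s code, and every name in it is hypothetical. It assumes a pretend face with only two motors (the real robot has 26), swaps the learned vision-to-action model for a simple nearest-neighbour lookup, and uses a hand-written table of target lip shapes in place of what the robot would extract from audio and human video. The “mirror” stage tries random motor commands and records the lip shape each one produces; the imitation stage then picks the recorded command whose shape best matches a target.

```python
import math
import random


def toy_face(command):
    """Hypothetical stand-in for the physical face: motor command -> lip shape.

    command is (jaw, corners), each in [0, 1]; the result is (opening, width).
    The formula is invented for illustration only.
    """
    jaw, corners = command
    opening = 0.8 * jaw + 0.1 * corners * jaw
    width = 0.5 + 0.4 * corners - 0.1 * jaw
    return (opening, width)


def babble(face, rng, n_samples=2000):
    """Mirror stage: try random commands and record the shape each produces."""
    memory = []
    for _ in range(n_samples):
        command = (rng.random(), rng.random())
        memory.append((face(command), command))
    return memory


def inverse_model(memory, target_shape):
    """Return the babbled command whose observed shape is closest to the target."""
    _, command = min(memory, key=lambda pair: math.dist(pair[0], target_shape))
    return command


# Assumed target lip shapes per sound, standing in for the audio and video stage.
TARGET_SHAPES = {"aa": (0.7, 0.6), "oo": (0.3, 0.5), "mm": (0.0, 0.6)}

rng = random.Random(0)          # fixed seed so the run is repeatable
memory = babble(toy_face, rng)  # the robot "watches itself in the mirror"

errors = {}
for sound, target in TARGET_SHAPES.items():
    command = inverse_model(memory, target)
    # Distance between the shape the face actually makes and the target shape.
    errors[sound] = math.dist(toy_face(command), target)
```

With enough random exploration, each value in `errors` becomes small, which mirrors the article’s point that the robot is never told how to move its motors and instead works it out from its own observations. A real system would replace the lookup with a trained model and the table with shapes predicted from sound.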

In its current state, the technology still needs some improvement, as shown by the difficulty the robot has producing the sounds “B” and “W”. Nevertheless, the system has made enormous strides beyond the speech capabilities of other robots currently on the market.

“This is the missing link in robotics,” said Lipson. “Much of the effort in developing humanoids focuses on walking or grasping, but facial expression is essential for human connection.”