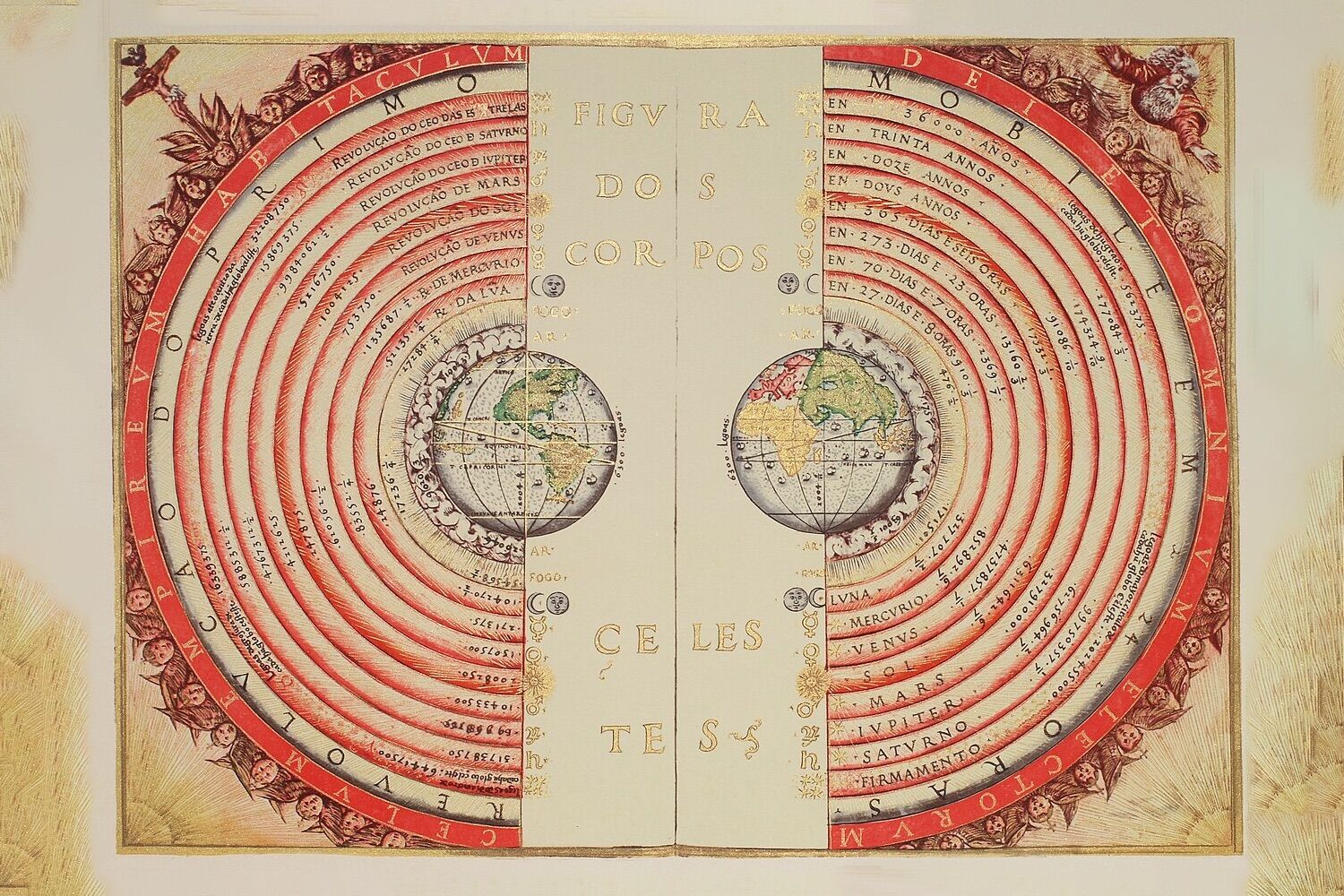

The Celestial Bodies, illustration of the Ptolemaic geocentric concept of the Universe, by the Portuguese cosmographer and cartographer Bartolomeu Velho (1568)

An interesting exercise that erased 200 years of scientific progress showed that AI cannot generate knowledge on its own, but that it is surprisingly good at placing factually correct information in its historical context.

Training artificial intelligence to reason as if the 20th and 21st centuries had not existed is no longer an imaginative exercise.

A recent experiment shows that, when its training data is severely limited, an AI responds as if we still lived in the 19th century, with results as coherent as they are disturbing from a technological and scientific point of view.

The project starts from a basic premise about language models: these systems do not generate new knowledge; they build their answers from the texts they were trained on.

As a consequence, machine learning models cannot write about scientific discoveries that have not yet happened, because there is no literature on those findings. The best an AI can do is repeat or synthesize the predictions researchers have written, explains .

From this perspective, a fundamental question arises for artificial intelligence research: if a model only has access to documents from a specific period, will it end up thinking like the people of that period?

This idea gave rise to TimeCapsuleLLM, an experimental project with no commercial intent that behaves as if all subsequent scientific and social progress had never occurred, and which can be found at .

The model, developed by Hayk Grigorian, a student at Muhlenberg College in the USA, was trained on 90 billion bytes of texts published in London between 1800 and 1875, a period marked by profound political and social transformations.

Although the model does not always sustain a coherent narrative, its responses are surprisingly well grounded in their historical context. As Grigorian reported in , TimeCapsuleLLM’s most intriguing result came from a simple test.

When asked to complete the sentence “It was the year of Our Lord 1834”, the model generated the following text: “It was the year of Our Lord 1834 and the streets of London were full of protests and petitions. The cause, as many reported, was not restricted to the private sphere, but, having arisen on the very day that Lord Palmerston governed it, the public would receive a brief account of the difficulties which led to the day of the law reaching us.”

Curious about the accuracy of the information, Grigorian went to check the facts: “The result mentioned Lord Palmerston, and after a Google search, I discovered that his actions did in fact lead to the 1834 protests.”

In principle, an LLM trained exclusively on information from a given historical period, a “Historical Large Language Model” (HLLM), could represent the norms of conduct of, for example, the Vikings or the early Romans.

However, this type of model has its risks and limitations. In an opinion article on , Adam Mastroianni gives an example: “If we trained an AI on ancient Greece, fed it all human knowledge and asked it how to land on the Moon, it would respond that this is impossible, since the Moon is a god floating in the sky.”

Likewise, if we trained an HLLM on data from the 16th century, the AI would swear up and down that Earth was the center of the Universe, that heavy objects fell faster than lighter ones… and that living beings could arise spontaneously from inanimate matter, like flies from garbage and mice from wheat.

In an article published in 2024 in Proceedings of the National Academy of Sciences, the authors suggest that HLLMs could be used to study human psychology outside the modern context, allowing cultural and social patterns of past civilizations to be analyzed through computer simulations.

“In principle, HLLM responses could reflect the psychology of past societies, allowing for a more robust and interdisciplinary science of human nature,” say the authors of the article.

“Researchers could, for example, compare the cooperative tendencies of Vikings, ancient Romans and early modern Japanese in economic games. Or they could explore attitudes about gender roles that were typical among ancient Persians or medieval Europeans,” they add.

However, the researchers themselves warn of these models’ limitations. The historical texts that have survived mostly reflect the worldview of social elites, which introduces a structural bias that is difficult to correct.

Added to this is the ideological influence of the programmers, which a study conducted by researchers at Ghent University in Belgium and published this month in npj Artificial Intelligence identified as a decisive factor in the results generated by language models.

In practice, then, AI models trained on data from past centuries suffer from exactly the same problems as the LLMs of our time.